
Jian Chen

I am a first-year Ph.D. student in HDSI at the University of California San Diego, where I work on efficient LLMs under the supervision of Prof. Zhijian Liu. Previously, I completed my Master's degree at Carnegie Mellon University, where I worked with Prof. Beidi Chen on efficient long-context LLM serving and with Prof. Ding Zhao on reinforcement learning. I completed my bachelor's degree at Zhejiang University.

Email / Google Scholar / GitHub / LinkedIn


Research

I am interested in machine learning and computer systems. My current focus is developing scalable, hardware-aware algorithms and systems that accelerate large machine learning models. Previously, I conducted research in generalizable reinforcement learning and hardware acceleration for machine learning.


Jian Chen*, Vashisth Tiwari*, Ranajoy Sadhukhan*, Zhuoming Chen, Jinyuan Shi, Ian En-Hsu Yen, Beidi Chen

ICLR 2025

Blog / ArXiv / Code

A comprehensive analysis of LLM inference performance and speculative decoding speedup. It finds that, with an intelligent speculation strategy, speculative decoding can achieve both low latency and high throughput for moderate to long sequences.


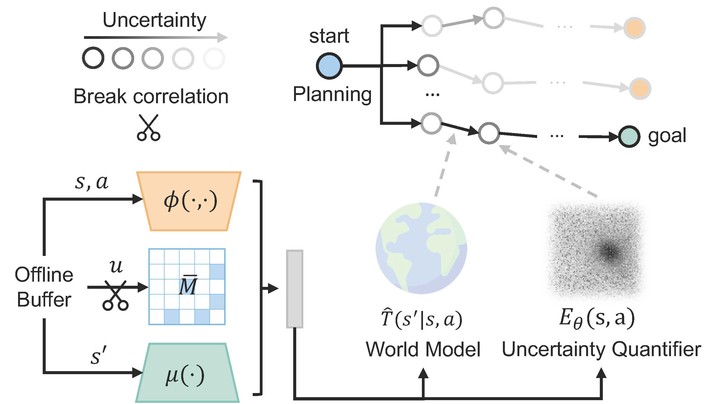

Haohong Lin, Wenhao Ding, Jian Chen, Laixi Shi, Jiacheng Zhu, Bo Li, Ding Zhao

NeurIPS 2024

ArXiv / Code

An algorithm that uses low-rank Markov Decision Processes to capture causal transition dynamics, addressing the objective mismatch caused by distribution shift in offline model-based reinforcement learning.


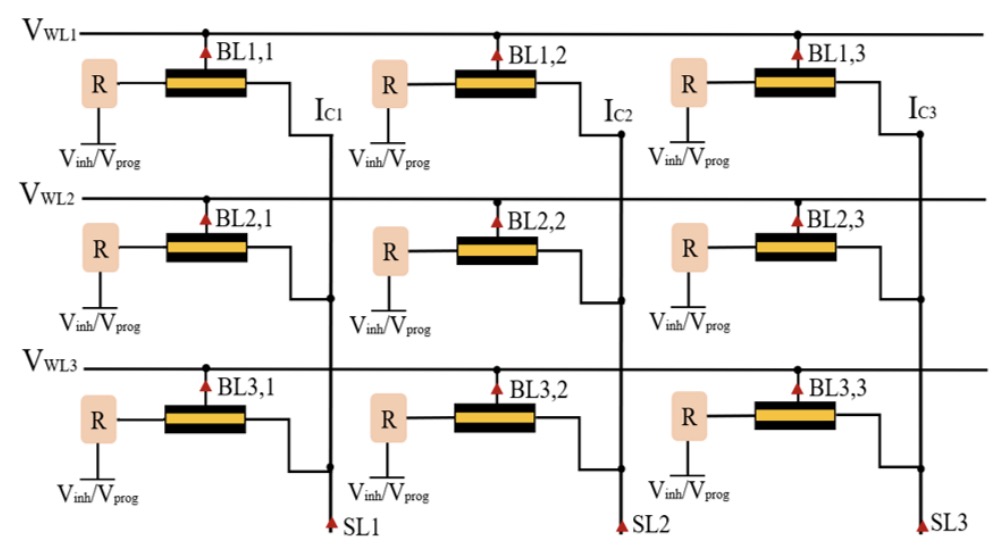

Shuxin Zhang, Jian Chen, Yumeng Wang, Zhimin Jia, Cheng Zhuo, Xunzhao Yin

ISEDA 2023

Paper

A novel compute-in-memory crossbar design that uses ferroelectric transistors to enable non-von Neumann hardware acceleration of multiply-and-accumulate operations in neural networks.